Real-time accuracy, precise predictions, and rapid processing are necessities for successful ML execution. ML models are deployed to monitor the accuracy and success of an ML project and to flag issues or errors. However, the traditional approach is manual: it depends on human ML engineers to update models with constantly evolving datasets that are often out of date by the time they’re applied. This standard method also makes it nearly impossible to test version success, run the volume of experiments needed for reliable measurement, or predict outcomes.

Our teams determined that automation was the answer. They developed an MLOps approach to support an ML pipeline infrastructure designed to handle previously manual tasks and data updates. Postindustria’s MLOps combines data extraction and analysis, modeling and testing, and continuous service delivery to train and evaluate models, track testing, and deploy well-performing models.

By building a flexible, scalable, automated structure for each project instead of implementing a pre-made, manual ML model, we streamlined previously unmanageable and inefficient model functionality and freed up ML engineers. We also gained consistently reliable, real-time datasets and objective measures of success.

A year ago we lost a potential client. A company declined our machine learning (ML) project proposal to automate sifting through abusive content on display ads. Neither a decade of experience in delivering solutions for the AdTech industry nor a team of certified ML engineers helped my team close that deal. We realized that our proposal must be lacking something but couldn’t figure out exactly what was missing.

Months later, we discovered our weak spot. We’d been deploying a ready-to-use trained machine learning model that was inflexible, inefficient, and ultimately drained value.

We could do better.

We reviewed our entire ML project management approach and discovered that building an infrastructure for a model’s training, evaluation, tuning, deployment, and continuous improvement gave us control over the critical R&D process and added structure to it.

In this article, I’ll share what I wish we had known about the ML process back then, outline the machine learning pipeline steps, and explain why delivering a pipeline, rather than a standalone model, is the most viable way to bring business value to users in any domain, from AdTech to healthcare.

Machine learning is a branch of computer science that uses algorithms to build and train models that perform routine tasks by detecting patterns in data and learning from experience.

ML relies on large sets of data samples to train a computer to perform tasks that humans usually do well but traditional computer programs handle poorly.

The tasks for ML projects can be grouped into two big categories: classification and regression.

Classification tasks allow us to predict or classify discrete values such as Safe or Unsafe, Suspicious or Unsuspicious, Spam or Not Spam, etc.

Regression tasks predict continuous values such as price, age, and salary.

These tasks can be grouped into smaller subcategories that lean on either of the above algorithms: object detection, image classification, anomaly detection, ranking, and clustering.

Spotting abusive content is a classic example of a classification task, while a task to predict a stock price at a given time, for example, falls into the category of tasks related to regression algorithms.
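To make the distinction concrete, here is a minimal sketch in plain Python. The data, the score feature, and the helper names (`fit_threshold`, `fit_line`) are hypothetical and chosen only for illustration; a real project would use an ML library and far more data. The classifier learns a cutoff that separates Safe from Unsafe samples, while the regressor fits a straight line to predict a continuous price.

```python
from statistics import mean

def fit_threshold(scores, labels):
    """Classification: pick the cutoff that misclassifies the fewest samples."""
    def errors(cutoff):
        return sum((s >= cutoff) != (l == "Unsafe") for s, l in zip(scores, labels))
    return min(sorted(scores), key=errors)

def classify(score, cutoff):
    """Return a discrete label for a single sample."""
    return "Unsafe" if score >= cutoff else "Safe"

def fit_line(xs, ys):
    """Regression: least-squares fit of y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    slope = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
             / sum((x - x_bar) ** 2 for x in xs))
    return slope, y_bar - slope * x_bar

# Hypothetical toy data: an "abuse score" per ad and its human-assigned label.
scores = [0.1, 0.2, 0.7, 0.9]
labels = ["Safe", "Safe", "Unsafe", "Unsafe"]
cutoff = fit_threshold(scores, labels)

# Hypothetical toy data: price observed on days 1 to 4.
days = [1, 2, 3, 4]
prices = [10.0, 12.0, 14.0, 16.0]
slope, intercept = fit_line(days, prices)

print(classify(0.8, cutoff))      # a discrete label
print(slope * 5 + intercept)      # a continuous value for day 5
```

The first model outputs one of a fixed set of labels, the second outputs a number on a continuous scale; that difference in output type is what separates the two categories.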

In healthcare, algorithms can be trained to classify X-ray images, MRI scans, and other medical images to detect potentially malignant lesions and tumors in human organs, which can help with early disease diagnosis. The applications of machine learning in healthcare go far beyond image classification and include automatic health report generation, smart records generation, drug discovery, patient condition tracking, and more.

Regardless of what type of task you deal with, you’ll need to deliver a trained machine learning model to solve it.

But a model alone is not enough. And here is why.

A common approach to managing ML projects leans on deploying ML models manually and usually follows these steps:

Below you can see the breakdown of the manual process of working with ML projects as perceived by Google Cloud services.

This workflow suggests that a trained model should be the final result or a delivery artifact of a successfully completed ML project.

However, this common ML approach is flawed for several reasons.

To optimize the metrics for test data, room for numerous experiments is needed. Experimenting with different model architectures, preprocessing code, and the hyperparameters that define the success of the learning process essentially re-trains the model multiple times. Here is how it works:

Through this process, the training dataset changes and the architecture of the model changes. On top of that, an enormous number of experiments need to be carried out to achieve an acceptable level of accuracy. Carrying out this task manually is onerous, which is why it makes sense to deliver an automated infrastructure for the process — a machine learning pipeline.
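The experiment loop described above could be automated roughly as follows. This is a sketch under stated assumptions, not our production code: the toy model (a single weight trained by gradient descent), the datasets, and the hyperparameter grid are all hypothetical. The point is the shape of the loop: every combination of hyperparameters triggers a full re-training, and each run is recorded so results can be compared objectively.

```python
from itertools import product

def train(data, learning_rate, epochs):
    """Fit y = w * x by plain gradient descent on mean squared error."""
    w = 0.0
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= learning_rate * grad
    return w

def evaluate(w, data):
    """Mean squared error of the model on a dataset."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

# Hypothetical toy datasets following y = 3x.
train_data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]
test_data = [(4.0, 12.0), (5.0, 15.0)]

# Hypothetical hyperparameter grid; real grids are usually much larger.
grid = {"learning_rate": [0.001, 0.01, 0.1], "epochs": [10, 100]}

experiments = []
for lr, epochs in product(grid["learning_rate"], grid["epochs"]):
    w = train(train_data, lr, epochs)  # each experiment re-trains from scratch
    experiments.append({
        "learning_rate": lr,
        "epochs": epochs,
        "test_mse": evaluate(w, test_data),
    })

# Compare all tracked experiments and keep the best configuration.
best = min(experiments, key=lambda e: e["test_mse"])
print(best)
```

Even this tiny grid produces six training runs. Adding more hyperparameters, model architectures, or preprocessing variants multiplies that number, which is why running and tracking experiments by hand quickly becomes impractical.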

A machine learning pipeline is a robust infrastructure that systematically trains and evaluates models, tracks experiments, and deploys well-performing models.

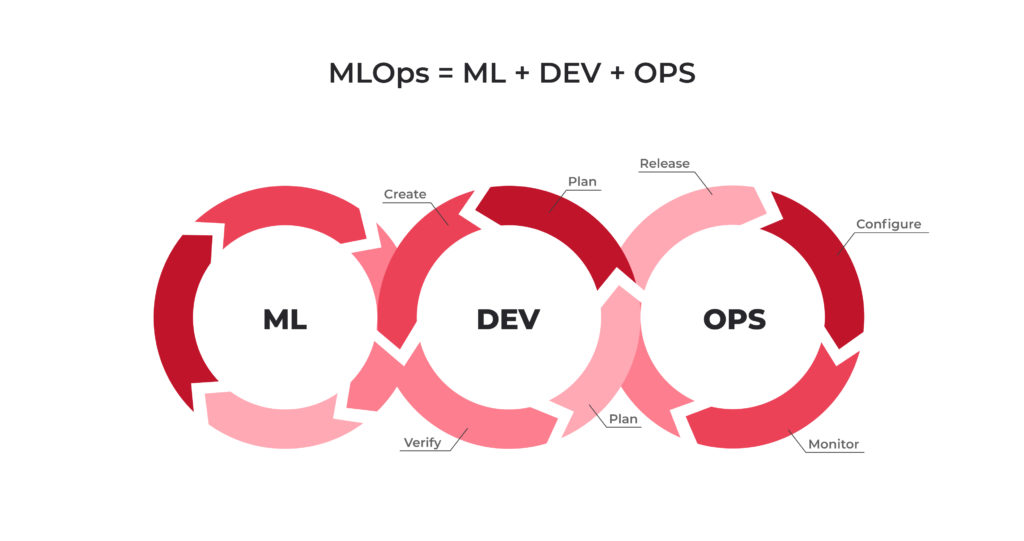

It relies on the idea of MLOps — a set of practices that ensure the implementation and automation of continuous integration, delivery, and training for ML systems.

MLOps combines data extraction and analysis (ML), modeling and testing (Dev) and continuous service delivery (Ops).

However, while MLOps works both with small projects and big data, our suggested pipeline is more suitable for datasets and models that fit on a conventional hard drive (usually their size does not exceed several hundred GB).

A machine learning pipeline consists of the following steps:

This workflow allows for continuous fine-tuning of existing models alongside constant performance evaluations. The biggest advantage of this process is that it can be automated with the help of available tools.

For med-tech startups, this means that they can get an ML model for their particular project that will be constantly updated as more patient data comes in, which will result in higher accuracy of the received results.
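A minimal sketch of that continuous retrain-and-evaluate cycle is shown below. Everything in it is an assumption made for illustration: the `Registry` class is a hypothetical in-memory stand-in for model storage and experiment tracking, the "model" is a single least-squares weight, and the data batches are invented. It shows one reasonable design, in which a newly trained candidate is deployed only if it beats the currently deployed model on the same holdout data.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Optional

@dataclass
class Registry:
    """Hypothetical stand-in for model storage plus experiment tracking."""
    deployed: Optional[float] = None          # weight of the model in service
    history: list = field(default_factory=list)

def train(data):
    """Toy model: least-squares weight w for y = w * x."""
    return sum(x * y for x, y in data) / sum(x * x for x, _ in data)

def evaluate(w, data):
    """Mean squared error on a dataset."""
    return mean((w * x - y) ** 2 for x, y in data)

def run_pipeline(registry, dataset, holdout):
    """Train a candidate, score it, log the run, and deploy only if it is better."""
    candidate = train(dataset)
    candidate_mse = evaluate(candidate, holdout)
    current_mse = (float("inf") if registry.deployed is None
                   else evaluate(registry.deployed, holdout))
    promoted = candidate_mse < current_mse
    registry.history.append(
        {"samples": len(dataset), "mse": candidate_mse, "promoted": promoted}
    )
    if promoted:
        registry.deployed = candidate
    return promoted

# Hypothetical data: the true relation is y = 2x; the last batch is corrupted.
holdout = [(3.0, 6.0), (4.0, 8.0)]
batches = [
    [(1.0, 2.5), (2.0, 3.5)],    # small, noisy first batch
    [(5.0, 10.0), (6.0, 12.0)],  # clean new data improves the model
    [(7.0, 0.0)],                # bad data makes the candidate worse
]

registry = Registry()
dataset = []
decisions = []
for batch in batches:
    dataset = dataset + batch    # new data arrives and triggers a pipeline run
    decisions.append(run_pipeline(registry, dataset, holdout))

print(decisions)
print(registry.history)
```

In this run the first two candidates are promoted, while the third, trained on the corrupted batch, is rejected and the previously deployed model stays in service. The evaluation gate is what lets the model update continuously without a person reviewing every retraining.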

To build an infrastructure for a machine learning pipeline and automate the process, the following components are needed (we prefer the Google Cloud Platform, but similar infrastructures are provided by other cloud vendors like AWS or Microsoft Azure):

In addition to these necessary components for building your ML pipeline, you might want to experiment with some other tools that can also be helpful: DVC (for saving new datasets and iterations of trained models), Jenkins (for automation and control) and Docker containers for deployment.

Clients looking for ML-driven solutions for their business receive a fully-fledged ML system instead of a trained ML model that needs revisiting.

With a deployed ML pipeline, the client will not have to keep returning to the team of ML engineers each time the model needs a substantial update. However, some level of technical expertise in machine learning will be required for maintenance.

The automation of the process is another significant benefit that speeds up the deployment of an ML model. Crucially, it alleviates the burden of manual tasks.

These machine learning pipeline steps take project management in machine learning to a new level, resulting in benefits both for clients and developers. Postindustria offers a full range of services for the delivery of solutions based on machine learning. Leave us your contact information in the form above and we’ll contact you to discuss your project.